Optimizing Touchscreen HMIs & Applications For Perspective

The Ignition Perspective Module makes it easy to design visualization applications for a variety of hardware types, including desktops, human-machine interfaces (HMIs), and mobile devices. However, developing, designing, and integrating applications for HMI touchscreens differs from desktop and mobile use cases. In this piece, we'll look at how touch events work on HMIs and at best practices for designing and deploying HMI applications and hardware with Perspective.

If you’d like to know more about selecting the right type of touchscreen HMI for your HMI application, check out OnLogic’s 7 Step Process to Selecting an Industrial Panel PC.

Basic Touch Functionality & OS-Specific Considerations

Modern operating systems handle touch input out of the box. To compare consistency, I tested touch functionality on Windows 11 Pro, Ubuntu 22.04, and Ubuntu 24.04 with OnLogic’s Tacton Panel PC platform.

The basic functionality consists of:

- A single press on the screen acts as a left mouse button click.

- Pressing and holding a finger on the screen acts as a right mouse button click.

- Multi-touch gesture support depends on the operating system and is only consistent on capacitive screens.
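The press-to-mouse mapping above can be modeled as a small classifier. This is a simplified standalone Python sketch, not how any particular operating system implements it: `classify_touch`, `TouchContact`, and both thresholds are hypothetical, and real long-press timing and movement tolerance are set by the OS.

```python
import math
from dataclasses import dataclass

# Hypothetical thresholds; the real values are chosen by the OS and vary.
LONG_PRESS_SECONDS = 0.8
MOVE_TOLERANCE_PX = 10.0


@dataclass
class TouchContact:
    down_time: float  # seconds when the finger touched the screen
    up_time: float    # seconds when the finger was lifted
    dx: float         # total horizontal movement in pixels
    dy: float         # total vertical movement in pixels


def classify_touch(contact: TouchContact) -> str:
    """Map one single-finger contact to the mouse action it typically emulates."""
    if math.hypot(contact.dx, contact.dy) > MOVE_TOLERANCE_PX:
        return "drag"  # finger moved: treated as a drag/swipe, not a click
    held = contact.up_time - contact.down_time
    # Short stationary press -> left click; long stationary press -> right click.
    return "right_click" if held >= LONG_PRESS_SECONDS else "left_click"


print(classify_touch(TouchContact(0.0, 0.15, 2, 1)))  # left_click
print(classify_touch(TouchContact(0.0, 1.2, 0, 0)))   # right_click
print(classify_touch(TouchContact(0.0, 0.3, 40, 0)))  # drag
```

Only the left-click path behaves the same everywhere, which is why the design advice below favors single presses.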

In general, using a single press (i.e., left click) is the best way to have users interact with screens. It provides a consistent experience across all platforms. Gestures can be helpful for navigating map elements (pinch & zoom), but navigation should be designed to work across all platforms. As a reminder, gestures will only work consistently on capacitive touchscreens.

Perspective Events & Legacy Application Migration

You can further customize how users interact with an application using Perspective events, which execute a command in response to an action. For example, the "onActionPerformed" event runs a command when a user interacts with a component such as a slider or one-shot button. There are also touch-specific events that trigger commands based on specific input types, such as when a user lifts their finger from the screen.

This matters when migrating legacy applications, such as those that relied on a resistive stylus and executed commands when the stylus was lifted from the screen. However, I recommend against carrying this style of touch interface forward; move to the standard “on-click” functionality instead. Because operators are already familiar with touchscreen interfaces on phones and tablets, there should be minimal friction or relearning. For users who do need to emulate legacy applications and inputs, OnLogic has tested EETI’s eGalaxTouch Windows utility with Tacton’s resistive touchscreens.
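To see why typical click behavior is the safer default, compare the two styles for a press that slides off a button before lifting. This standalone Python sketch models, rather than reproduces, Perspective's events, and the names `Rect`, `fires_on_click`, and `fires_on_lift` are hypothetical. With click semantics, both the press and the release must land on the button, so an operator can cancel an accidental press by sliding off it.

```python
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]


@dataclass
class Rect:
    x: float
    y: float
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def fires_on_click(button: Rect, down: Point, up: Point) -> bool:
    # Click style: press and release must both be on the button,
    # so sliding off before lifting cancels the action.
    return button.contains(*down) and button.contains(*up)


def fires_on_lift(button: Rect, down: Point, up: Point) -> bool:
    # Hypothetical legacy "act on release" style: any lift after pressing
    # the button fires, even if the stylus has slid off it first.
    return button.contains(*down)


start = Rect(100, 100, 120, 60)
slid_off = ((150, 130), (400, 130))  # pressed on the button, lifted elsewhere
print(fires_on_click(start, *slid_off))  # False
print(fires_on_lift(start, *slid_off))   # True
```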

HMI Touchscreen & Gesture Best Practices:

- Stick to single touch (i.e., left click) wherever possible. This provides the most consistent experience, and since a given fleet of devices may be heterogeneous, building applications that work well on all types of screens is ideal.

- Minimize components that require movement, such as swiping, sliders, etc.

- Do not use a touchscreen for momentary (i.e., “jog”) buttons that write to a PLC. Touch press-and-release events are not delivered consistently, so a tag value can get stuck in the on or off position. Consider using fail-safes, automating the action, or keeping these as physical buttons next to the panel.

- Size and space buttons appropriately so they are easy to press. This lets operators interact consistently across different screen types and input devices.

- Gestures can be helpful, but you should not always rely on them. Build straightforward navigation with buttons that allow ease of use across platforms.
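One common fail-safe pattern for momentary buttons is a heartbeat with a timeout: the output stays on only while the HMI keeps confirming that the button is held, so a missed release cannot latch it on. The sketch below models that logic in standalone Python using a hypothetical `MomentaryWatchdog` class and an assumed 0.5 s timeout; it is not an Ignition API, and in a real system this check would typically live in the PLC program rather than the HMI.

```python
import time
from typing import Callable, Optional


class MomentaryWatchdog:
    """Hypothetical fail-safe model for a touchscreen jog button.

    While the operator holds the button, the HMI calls heartbeat() periodically.
    If heartbeats stop (for example, a release event is lost), output turns
    False on its own once timeout_s has elapsed.
    """

    def __init__(self, timeout_s: float = 0.5,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock
        self._last_heartbeat: Optional[float] = None

    def press(self) -> None:
        # Button went down: start the hold window.
        self._last_heartbeat = self.clock()

    def heartbeat(self) -> None:
        # Button still held: extend the window, but only if it has not already
        # timed out, so a late heartbeat cannot re-arm the output.
        if self.output:
            self._last_heartbeat = self.clock()

    def release(self) -> None:
        # Normal release: drop the output immediately.
        self._last_heartbeat = None

    @property
    def output(self) -> bool:
        # True only while pressed and the last heartbeat is recent enough.
        if self._last_heartbeat is None:
            return False
        return self.clock() - self._last_heartbeat < self.timeout_s


# Usage with a fake clock so the timing is deterministic.
now = [0.0]
jog = MomentaryWatchdog(timeout_s=0.5, clock=lambda: now[0])
jog.press()
now[0] = 0.3
print(jog.output)  # True
jog.heartbeat()
now[0] = 0.7
print(jog.output)  # True (about 0.4 s since the last heartbeat)
now[0] = 1.0
print(jog.output)  # False: heartbeats stopped and no release arrived
```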

On-Screen Keyboard (OSK) & OS-Specific Considerations

When an HMI needs input that would otherwise come from a physical keyboard, users can type on an on-screen keyboard (OSK), a virtual keyboard displayed on the touchscreen. When enabled, the keyboard appears when a user taps into a text or numeric entry field. Both Windows and Linux distributions provide a default OSK, though it may require some setup depending on the application.

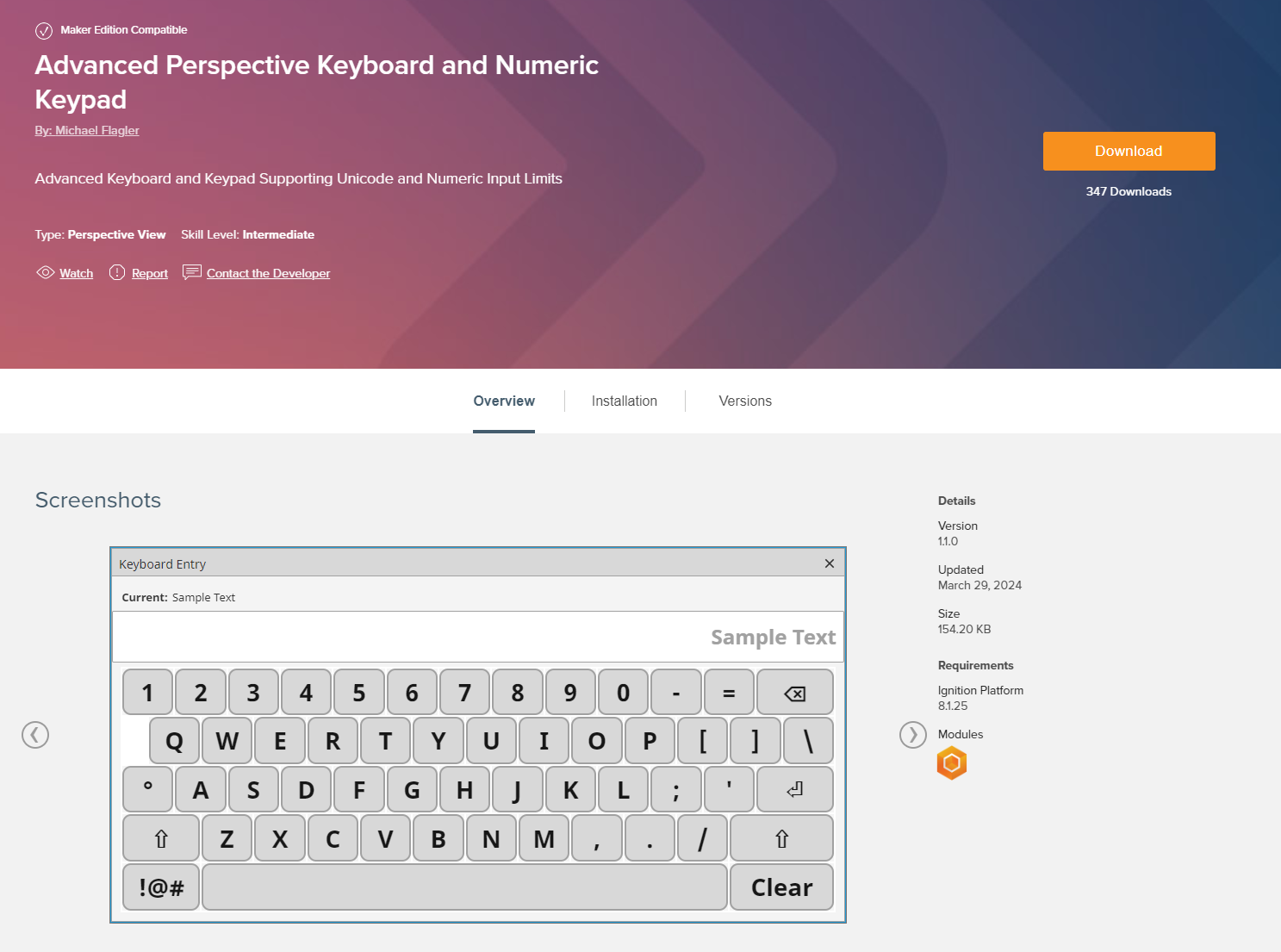

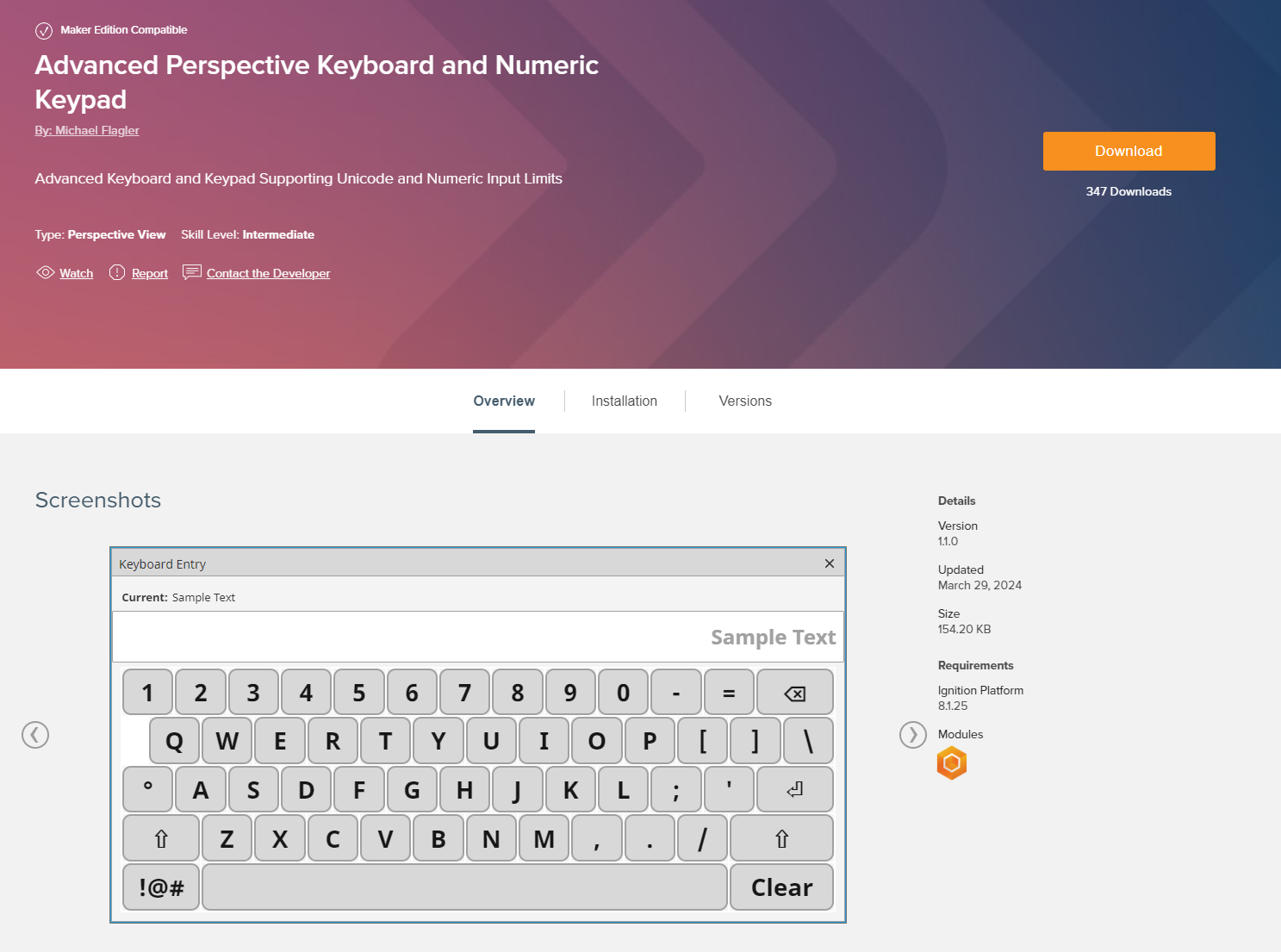

While Vision had an integrated touchscreen keyboard, Perspective requires additional components to provide one. In Perspective, you can either use the operating system’s built-in OSK or integrate a keyboard as a component directly in the Perspective session. Pre-built Perspective OSK and numpad components are available on the Ignition Exchange. If you integrate the keyboard directly into the Perspective session, you must disable the OS’s built-in OSK so that two keyboards don’t appear at once.

Fig. 1. Ignition Exchange resource for on-screen keyboard as Perspective component

Windows Built-in Options: OSK vs. Touch Keyboard:

Fig. 2. Windows Touch Keyboard has options to customize layout and size.

Somewhat confusingly, Windows offers both a standard OSK and a touch keyboard. Both pop up as a window when needed, but the touch keyboard (Fig. 2) is more responsive and offers much more flexibility in customizing size and position, so use it when possible. With Windows 11, you can also add a taskbar icon that lets you turn the keyboard on and off manually. To set it up, turn off the standard OSK in the Keyboard section of the Accessibility settings, turn on the touch keyboard (Time & Language > Typing), and add its icon to the taskbar (Personalization > Taskbar).

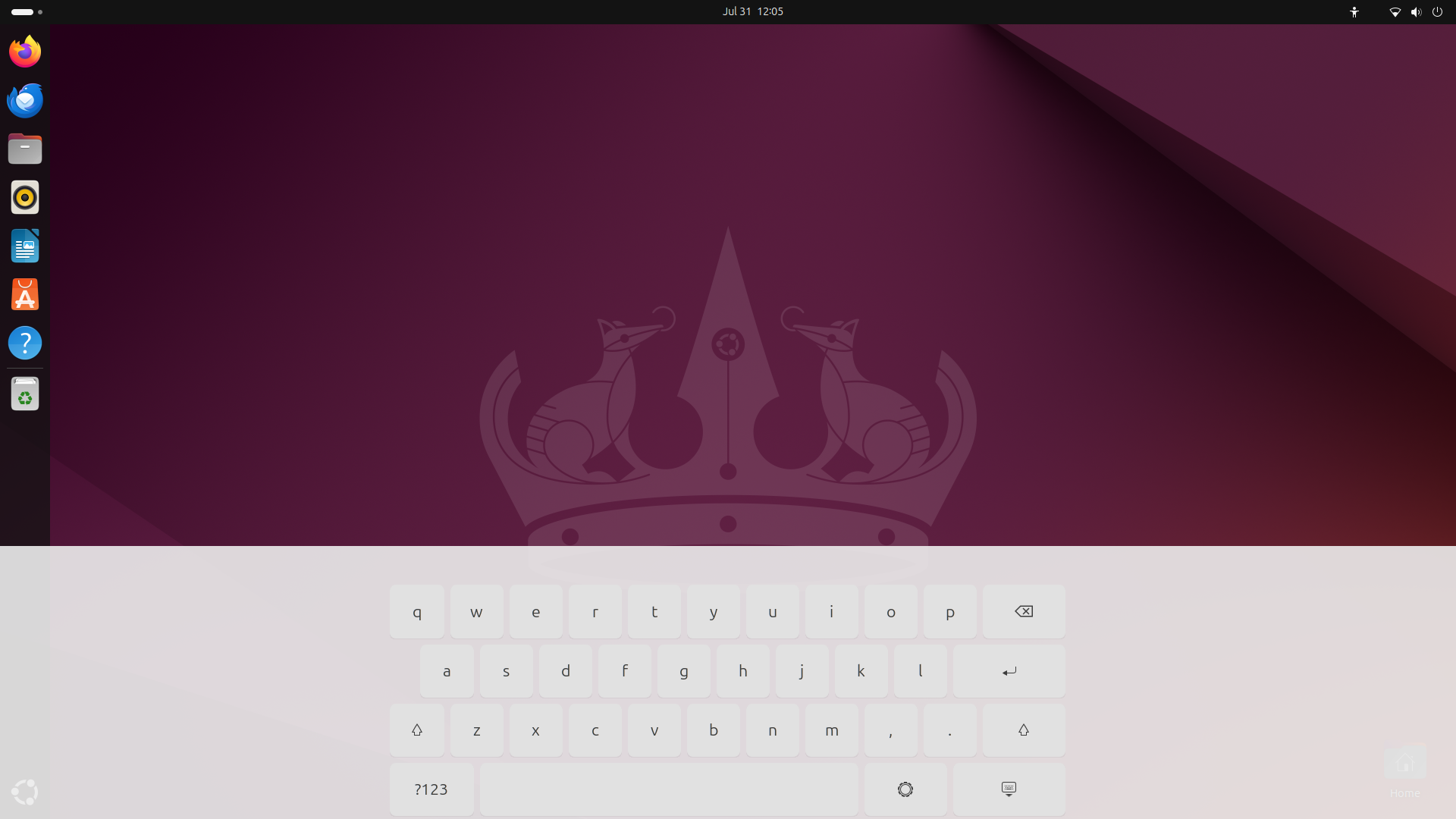

Linux OSK & Implementation

You can enable the OSK in the system Accessibility settings under the Typing section. It pops up from the bottom of the screen and occupies about one-third of the display, and on projected capacitive (PCAP) screens you can also bring it up manually by swiping up from the bottom edge. Because of its size, the OSK can cover input fields, which makes data entry awkward.
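On Ubuntu’s default GNOME desktop, the screen keyboard switch corresponds to the `screen-keyboard-enabled` key in the `org.gnome.desktop.a11y.applications` schema, so it can be scripted with `gsettings`, for example to turn it off when a Perspective OSK component handles input instead. This is a minimal standalone Python sketch assuming a GNOME session; the helper names are hypothetical, and the command must run as the logged-in kiosk user because the setting is per user.

```python
import subprocess
from typing import List

SCHEMA = "org.gnome.desktop.a11y.applications"
KEY = "screen-keyboard-enabled"


def screen_keyboard_command(enabled: bool) -> List[str]:
    """Build the gsettings call that turns GNOME's built-in OSK on or off."""
    return ["gsettings", "set", SCHEMA, KEY, "true" if enabled else "false"]


def apply_screen_keyboard(enabled: bool) -> None:
    """Run the command in the current user's GNOME session (needs gsettings)."""
    subprocess.run(screen_keyboard_command(enabled), check=True)


# Disable the OS keyboard when a Perspective OSK component is used instead.
print(" ".join(screen_keyboard_command(False)))
```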

Fig. 3. Default GNOME OSK in Ubuntu 24.04 LTS

Customizing OSK behavior can be tricky depending on the Linux distribution. Recent releases, including Ubuntu 22.04 and 24.04, default to Wayland, which replaces the X11 window system protocol that Linux has traditionally used to handle graphical interfaces. As a result, older tools built for X11 often won’t work when deployed system-wide on these releases. If the default OSK can’t be used, plan ahead for how operators will enter input.
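Before rolling out an X11-based keyboard tool, it helps to confirm which display server the panel’s session is actually using. This standalone Python sketch relies on the standard `XDG_SESSION_TYPE`, `WAYLAND_DISPLAY`, and `DISPLAY` environment variables; the function name `display_server` is hypothetical.

```python
import os
from typing import Mapping, Optional


def display_server(env: Optional[Mapping[str, str]] = None) -> str:
    """Return "wayland", "x11", or "unknown" for the current graphical session."""
    env = os.environ if env is None else env
    session = env.get("XDG_SESSION_TYPE", "").lower()
    if session in ("wayland", "x11"):
        return session
    # Check WAYLAND_DISPLAY first: under Wayland, DISPLAY is usually also set
    # for XWayland, so DISPLAY alone does not prove an X11 session.
    if env.get("WAYLAND_DISPLAY"):
        return "wayland"
    if env.get("DISPLAY"):
        return "x11"
    return "unknown"


print(display_server())
```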

OSK Best Practices

- Avoid using an OSK with a resistive screen when possible; typing on one is slow.

- Repeated typing on any touchscreen is time-consuming. Barcode scanners, ID scanners, RF devices, and other input devices can automate data collection instead.

Wake-on-Touch

When a system is panel-mounted, be sure to consider how it will wake from a standby or suspend state. While Windows 11 supports wake-on-touch natively, the default Ubuntu 22.04/24.04 environment and Windows 10 do not offer the same option. One obvious solution is to disable sleep/suspend in your operating system’s power settings; however, the Tacton platform also offers hardware features that can help, whether you’re running Windows or Linux.

- Infrared Proximity Wake: The embedded IR sensor wakes the system from sleep when a user approaches. The feature is enabled, and its detection distance customized, via the LPMCU command-line interface tool.

- Remote Power Switch: A physical power button can be wired to the integrated DIO (pins 1 and 2). A simple momentary switch connected there will wake the computer.
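If you take the disable-sleep route described above, the setting can be scripted. This standalone Python sketch only builds the commands; the helper names are hypothetical, the Linux branch assumes Ubuntu’s default GNOME desktop and must run as the logged-in kiosk user, and on Windows `powercfg` may need an elevated prompt depending on policy.

```python
import platform
import subprocess
from typing import List, Optional


def disable_sleep_commands(system: Optional[str] = None) -> List[List[str]]:
    """Return commands that stop the OS from suspending while on AC power."""
    system = system or platform.system()
    if system == "Windows":
        # Sleep (standby) timeout on AC power for the active plan; 0 means never.
        return [["powercfg", "/change", "standby-timeout-ac", "0"]]
    if system == "Linux":
        # GNOME: take no suspend action when idle on AC power.
        return [["gsettings", "set", "org.gnome.settings-daemon.plugins.power",
                 "sleep-inactive-ac-type", "'nothing'"]]
    raise ValueError(f"unsupported system: {system}")


def apply_commands(commands: List[List[str]]) -> None:
    """Run each command, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)


print(disable_sleep_commands("Windows"))
```

Disabling sleep is the simplest option, but it keeps the panel fully powered; the hardware wake features above are the alternative when standby is required.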

Conclusion

As you can see, there are many decisions to make when creating great HMI touchscreen applications. Careful forethought about how users will interact with your HMI applications, which controls they need, and how to make the interface as intuitive as possible will help ensure your application is effective. If you’d like to learn more about OnLogic’s HMI solutions for Ignition, check out our line of hardware or reach out to speak to an OnLogic solution expert.